Metrics and Preemptive Generation

Measuring performance and shaving latency

Your agent now sounds great and survives outages. Next, we need visibility. Metrics help you understand usage, latency, and failure modes.

We'll also add something called preemptive generation. This reduces response delay by starting LLM and TTS work as soon as a user transcript is available, before the end of the user's turn is confirmed.

Capturing usage metrics

Add these imports near the top of agent.py:

```python
from livekit.agents import AgentStateChangedEvent, MetricsCollectedEvent, metrics
```

Inside entrypoint, before session.start(...), we'll wire up the collectors:

```python
usage_collector = metrics.UsageCollector()
# Most recent end-of-utterance metrics; used later to compute time to first audio
last_eou_metrics: metrics.EOUMetrics | None = None

@session.on("metrics_collected")
def _on_metrics_collected(ev: MetricsCollectedEvent):
    nonlocal last_eou_metrics
    if ev.metrics.type == "eou_metrics":
        last_eou_metrics = ev.metrics

    metrics.log_metrics(ev.metrics)      # print per-component metrics to the console
    usage_collector.collect(ev.metrics)  # accumulate usage for the shutdown summary

async def log_usage():
    summary = usage_collector.get_summary()
    logger.info("Usage summary: %s", summary)

# Log the usage summary when the job shuts down
ctx.add_shutdown_callback(log_usage)
```

This captures per-turn statistics and logs a summary when the worker shuts down.
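metrics.UsageCollector comes from LiveKit; to make "collecting usage" concrete, here is a hypothetical, dependency-free stand-in that keeps one running total per component. The event classes and field names below are invented for illustration and are not LiveKit's metric types.

```python
from dataclasses import dataclass, replace

# Hypothetical stand-ins for per-component metric events
@dataclass
class LLMUsageEvent:
    prompt_tokens: int
    completion_tokens: int

@dataclass
class TTSUsageEvent:
    characters: int

@dataclass
class STTUsageEvent:
    audio_seconds: float

@dataclass
class UsageTotals:
    prompt_tokens: int = 0
    completion_tokens: int = 0
    tts_characters: int = 0
    stt_audio_seconds: float = 0.0

class SimpleUsageCollector:
    """Sums billable usage across events (illustrative, not LiveKit's class)."""

    def __init__(self) -> None:
        self._totals = UsageTotals()

    def collect(self, event) -> None:
        if isinstance(event, LLMUsageEvent):
            self._totals.prompt_tokens += event.prompt_tokens
            self._totals.completion_tokens += event.completion_tokens
        elif isinstance(event, TTSUsageEvent):
            self._totals.tts_characters += event.characters
        elif isinstance(event, STTUsageEvent):
            self._totals.stt_audio_seconds += event.audio_seconds
        # Anything else (e.g. end-of-utterance timing) carries no usage and is ignored

    def get_summary(self) -> UsageTotals:
        # Return a copy so callers can't mutate the running totals
        return replace(self._totals)
```

Summing per component like this is what lets you turn one call's worth of events into token, character, and audio-duration totals that map onto provider billing.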

Tracking time to first audio

When the agent begins speaking, we want to know how long the human waited:

```python
@session.on("agent_state_changed")
def _on_agent_state_changed(ev: AgentStateChangedEvent):
    # Only measure when the agent starts speaking the reply to the turn
    # whose end-of-utterance metrics we just recorded
    if (
        ev.new_state == "speaking"
        and last_eou_metrics
        and session.current_speech
        and last_eou_metrics.speech_id == session.current_speech.id
    ):
        delta = ev.created_at - last_eou_metrics.last_speaking_time
        logger.info("Time to first audio frame: %sms", delta.total_seconds() * 1000)
```

Keep an eye on this metric as you change providers or prompts.
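Individual log lines are noisy, so a percentile summary over many turns is easier to track. A minimal sketch, assuming you have gathered the logged time-to-first-audio values into a list of milliseconds; summarize_ttfa is a hypothetical helper, and p95 uses the nearest-rank method.

```python
import math
import statistics

def summarize_ttfa(samples_ms: list[float]) -> dict[str, float]:
    """Summarize time-to-first-audio samples given in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: the smallest value with at least 95% of samples at or below it
    p95_rank = math.ceil(0.95 * len(ordered))
    return {
        "count": len(ordered),
        "p50": statistics.median(ordered),
        "p95": ordered[p95_rank - 1],
        "max": ordered[-1],
    }
```

Running it on samples from two sessions (for example, one per provider or prompt) gives a like-for-like comparison; the p95 usually tells you more about perceived sluggishness than the median does.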

Enabling preemptive generation

Preemptive generation lets the agent start generating a reply as soon as it has a user transcript, before end-of-turn detection confirms that the user has finished speaking. Add this flag to AgentSession:

```python
session = AgentSession(
    # ...
    preemptive_generation=True,
)
```

You can use our new metrics to compare before and after. You should see faster first audio frames, especially for long user turns.
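To make the idea concrete, here is a hypothetical sketch of speculative generation in plain asyncio: start work when a final transcript arrives, throw it away if the transcript changes, and reuse it if the transcript is unchanged when the turn ends. This is not LiveKit's internal implementation; SpeculativeResponder and its methods are invented for illustration.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

class SpeculativeResponder:
    """Illustrative only: speculatively generate, then reuse or discard at end of turn."""

    def __init__(self, generate: Callable[[str], Awaitable[str]]) -> None:
        self._generate = generate
        self._task: asyncio.Task | None = None
        self._transcript: str | None = None

    def on_final_transcript(self, transcript: str) -> None:
        # The user kept talking: the earlier speculative reply is stale, so cancel it
        if self._task is not None and transcript != self._transcript:
            self._task.cancel()
            self._task = None
        # Start generating immediately instead of waiting for end of turn
        if self._task is None:
            self._transcript = transcript
            self._task = asyncio.create_task(self._generate(transcript))

    async def on_end_of_turn(self, transcript: str) -> tuple[str, bool]:
        """Return (reply, reused_speculative_work)."""
        if self._task is not None and transcript == self._transcript:
            reply, reused = await self._task, True  # head start already paid for
        else:
            if self._task is not None:
                self._task.cancel()
            reply, reused = await self._generate(transcript), False
        self._task, self._transcript = None, None
        return reply, reused
```

The design trade-off is visible in on_final_transcript: every cancelled speculative request is work (and possibly tokens) spent for nothing, which is the price paid for the head start on turns where the transcript doesn't change.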

Optional: Langfuse tracing

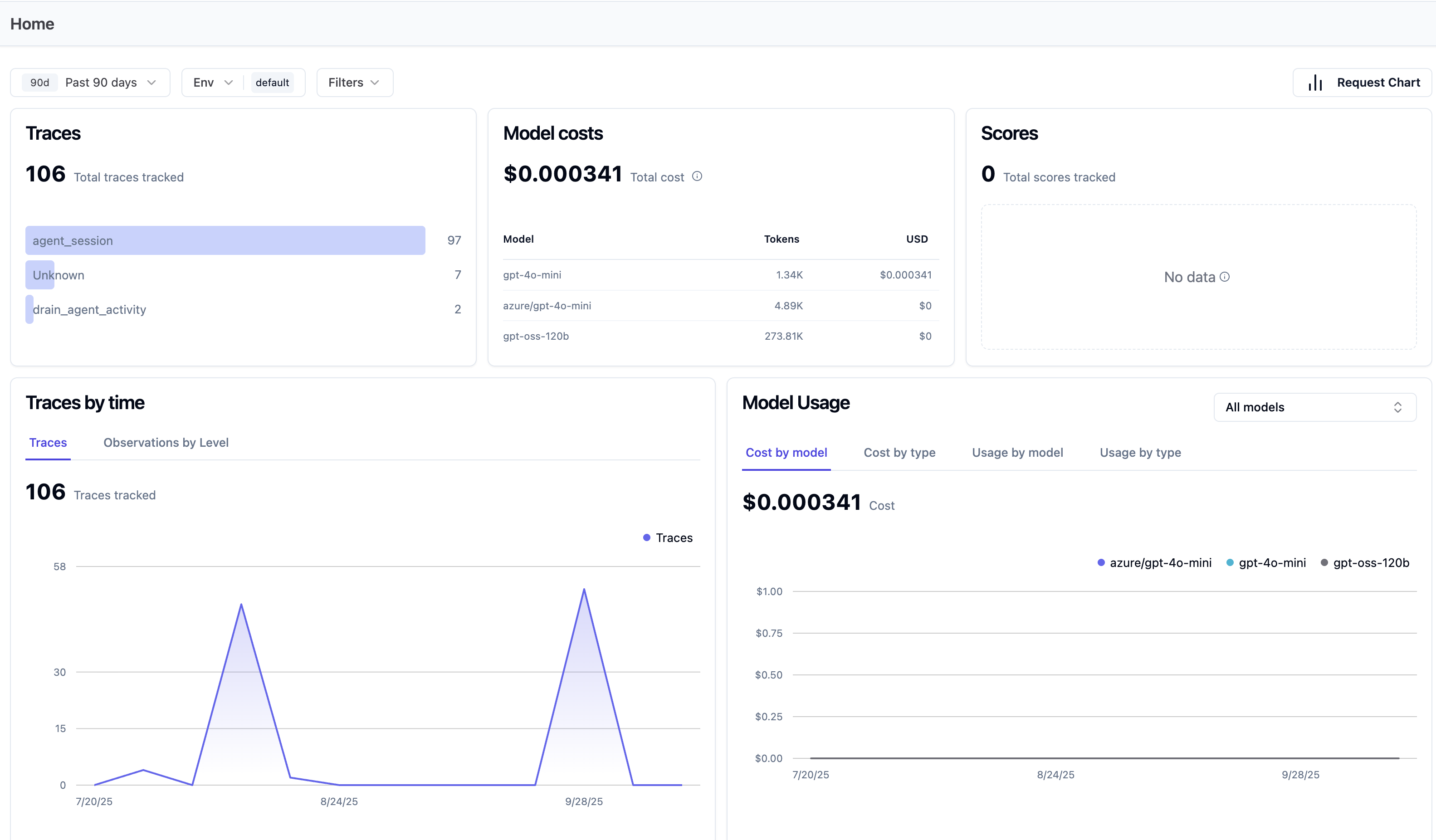

Langfuse is an open source platform that we can use to display our traces and metrics on an easy-to-grok dashboard.

This is what Langfuse looks like:

It doesn't require a credit card, and we'll get an API key right after signing up.

If you want end-to-end tracing in Langfuse:

- Add credentials to .env.local:

```
LANGFUSE_PUBLIC_KEY=
LANGFUSE_SECRET_KEY=
LANGFUSE_HOST=
```

- Import the telemetry helpers:

```python
import base64
import os

from livekit.agents.telemetry import set_tracer_provider
```

- Define a helper:

```python
def setup_langfuse(host=None, public_key=None, secret_key=None):
    # Imported inside the function so the OpenTelemetry packages are only needed when tracing is set up
    from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    # Fall back to the values from .env.local
    public_key = public_key or os.getenv("LANGFUSE_PUBLIC_KEY")
    secret_key = secret_key or os.getenv("LANGFUSE_SECRET_KEY")
    host = host or os.getenv("LANGFUSE_HOST")

    if not public_key or not secret_key or not host:
        raise ValueError("LANGFUSE_PUBLIC_KEY, LANGFUSE_SECRET_KEY, and LANGFUSE_HOST are required")

    # Basic auth: public key as the username, secret key as the password
    auth = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = f"{host.rstrip('/')}/api/public/otel"
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = f"Authorization=Basic {auth}"

    # The exporter picks up the endpoint and headers from the environment variables above
    provider = TracerProvider()
    provider.add_span_processor(BatchSpanProcessor(OTLPSpanExporter()))
    set_tracer_provider(provider)
```

- Call it at the top of entrypoint if you want traces:

```python
async def entrypoint(ctx: JobContext):
    setup_langfuse()  # configure tracing before the rest of the setup runs
    # rest of setup…
```

And that's it! All of your call traces and telemetry will now be sent to Langfuse in addition to being reported in the console by your agent.

You don't need to use Langfuse, but the same approach lets your agent push telemetry to any OpenTelemetry-compatible endpoint.
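The only Langfuse-specific parts of the helper are the endpoint path and the Basic auth header; everything else is standard OTLP configuration. A minimal sketch that factors that step out so it can point at another backend, assuming the backend accepts OTLP over HTTP with a header-based credential; otlp_env and basic_auth_header are hypothetical helper names.

```python
import base64

def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic auth header value."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return f"Basic {token}"

def otlp_env(endpoint: str, headers: dict[str, str]) -> dict[str, str]:
    """Return OTLP exporter environment variables for a given collector endpoint."""
    return {
        "OTEL_EXPORTER_OTLP_ENDPOINT": endpoint.rstrip("/"),
        # Comma-separated key=value pairs, the same shape the helper above writes
        "OTEL_EXPORTER_OTLP_HEADERS": ",".join(f"{k}={v}" for k, v in headers.items()),
    }
```

You would apply the result with os.environ.update(...) before constructing OTLPSpanExporter(), exactly as setup_langfuse does, so the exporter reads the new values.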

In this section we added:

- Console logs that show per-component metrics and time to first audio

- Lower latency from enabling preemptive_generation

- Optional tracing that emits spans to Langfuse when configured

With metrics in place, you can make data-driven decisions as you add tools and workflows in upcoming lessons.

Latency budget and pipeline streaming

Low-latency voice requires parallel work across the stack:

- Stream everything: STT, LLM, and TTS should operate incrementally, not in big batches.

- Parallelize: start TTS as soon as the LLM emits the first words; don’t wait for a full sentence.

- Avoid blocking I/O: keep tool calls and storage async; bound timeouts and retries.

- Keep prompts tight: fewer tokens means faster first audio and lower cost.
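The "stream everything" and "parallelize" points above come down to connecting stages with queues so that each stage consumes chunks as soon as the previous one produces them. A toy sketch in plain asyncio; the two stages are stand-ins, not real LLM or TTS clients.

```python
import asyncio

async def llm_stream(tokens: list[str], out: asyncio.Queue, log: list) -> None:
    """Stand-in LLM: emits tokens one at a time."""
    for tok in tokens:
        await asyncio.sleep(0)  # simulate waiting for the next chunk from the model
        log.append(("llm", tok))
        await out.put(tok)
    await out.put(None)  # end-of-stream sentinel

async def tts_stream(inp: asyncio.Queue, log: list) -> None:
    """Stand-in TTS: starts on the first chunk instead of waiting for the full reply."""
    while (tok := await inp.get()) is not None:
        log.append(("tts", tok))

async def run_pipeline(tokens: list[str]) -> list:
    queue: asyncio.Queue = asyncio.Queue()
    log: list = []
    # Both stages run concurrently; the queue hands chunks downstream as they appear
    await asyncio.gather(llm_stream(tokens, queue, log), tts_stream(queue, log))
    return log
```

In the resulting log, TTS handles the first token before the LLM has produced the last one; in a batch design, the first TTS event could only appear after every LLM event.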

Track where time goes: STT first token, LLM first token, TTS first audio, and overall time to first audio per turn. Compare these against your target budget; aim for under 1000 ms in the large majority of turns.
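A minimal sketch of such a budget check, assuming you record three stage latencies per turn and approximate time to first audio as their sum (each stage waits on the first output of the one before it). TurnTiming and budget_report are hypothetical names, and the field split is a simplification of a real pipeline.

```python
from dataclasses import dataclass

@dataclass
class TurnTiming:
    """Per-turn stage latencies in milliseconds (hypothetical fields)."""
    stt_ms: float              # until the final transcript is available
    llm_ttft_ms: float         # LLM time to first token
    tts_first_audio_ms: float  # TTS time to first audio

    @property
    def total_ms(self) -> float:
        # Approximation: first-chunk latencies add up along the pipeline
        return self.stt_ms + self.llm_ttft_ms + self.tts_first_audio_ms

def budget_report(turns: list[TurnTiming], target_ms: float = 1000.0) -> dict:
    if not turns:
        raise ValueError("need at least one turn")
    n = len(turns)
    within = sum(1 for t in turns if t.total_ms <= target_ms)
    # Average contribution of each stage shows where the budget is going
    avg = {
        "stt": sum(t.stt_ms for t in turns) / n,
        "llm": sum(t.llm_ttft_ms for t in turns) / n,
        "tts": sum(t.tts_first_audio_ms for t in turns) / n,
    }
    return {"within_budget": within / n, "avg_stage_ms": avg, "slowest_stage": max(avg, key=avg.get)}
```

For example, turns of 200 + 450 + 150 ms and 300 + 900 + 200 ms give totals of 800 ms and 1400 ms, so half the turns meet a 1000 ms target, and the LLM is the stage to look at first.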

LLMs that give higher-quality responses may have a higher time to first token. That may be a worthwhile trade for you, but you may then need to cut latency elsewhere in the stack to keep overall response times fast.

This is one of the biggest benefits of having total control over the pipeline. You can optimize for exactly what your business needs.

Why WebRTC for voice

- HTTP (TCP) adds head-of-line blocking and lacks audio semantics; good for text, bad for real time.

- WebSockets improve persistence but still ride on TCP with the same blocking issues.

- WebRTC builds on UDP with audio-first features: Opus compression, per-packet timestamps, and real-time network adaptation for conversational latency.
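Head-of-line blocking is easiest to see with numbers. A toy model, assuming 20 ms audio packets, a fixed 50 ms one-way delay, and a single lost packet that is recovered by one retransmission 200 ms after it was first sent; real TCP retransmission timing and WebRTC jitter buffers are more involved, and delivery_delays is a hypothetical helper.

```python
def delivery_delays(n_packets, lost_index, interval_ms=20, one_way_ms=50, retransmit_after_ms=200):
    """Return (in_order_delays, unordered_delays) in ms for each packet."""
    sent = [i * interval_ms for i in range(n_packets)]
    arrived = [s + one_way_ms for s in sent]
    # The lost packet only arrives once it has been retransmitted
    arrived[lost_index] = sent[lost_index] + retransmit_after_ms + one_way_ms

    # TCP-like: packet i can't reach the application until all earlier packets have arrived
    in_order, latest = [], 0
    for i in range(n_packets):
        latest = max(latest, arrived[i])
        in_order.append(latest - sent[i])

    # UDP-like: each packet is usable on arrival; the lost one is skipped (None)
    # and its gap is concealed by the decoder instead of waited for
    unordered = [None if i == lost_index else arrived[i] - sent[i] for i in range(n_packets)]
    return in_order, unordered
```

With six packets and the third one lost, the in-order delays are [50, 50, 250, 230, 210, 190]: one loss stalls everything behind it. The unordered delays stay at 50 ms for every delivered packet, and the missing 20 ms is left to the codec (Opus supports packet loss concealment).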

Metrics that you should track

- Time to first LLM token (TTFT)

- User interruption/barge-in rate

- Tool latency and failure rate; fallback activations

- Time to first audio frame (TTFA)

- STT accuracy proxies (e.g., correction requests, intent reversals)
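Most of these reduce to counting over per-turn records. A minimal sketch, assuming you log one hypothetical TurnRecord per turn from your own events (the fields are illustrative, not a LiveKit type); TTFT and TTFA are better summarized as percentiles, and STT accuracy proxies need intent-level labels, so both are left out here.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class TurnRecord:
    """Hypothetical per-turn record built from your own logs or metrics events."""
    interrupted: bool                 # user barged in while the agent was speaking
    tool_calls: int = 0
    tool_failures: int = 0
    tool_latencies_ms: list[float] = field(default_factory=list)
    fallback_used: bool = False       # a fallback provider handled this turn

def conversation_kpis(turns: list[TurnRecord]) -> dict:
    if not turns:
        raise ValueError("need at least one turn")
    calls = sum(t.tool_calls for t in turns)
    latencies = [ms for t in turns for ms in t.tool_latencies_ms]
    return {
        "barge_in_rate": sum(t.interrupted for t in turns) / len(turns),
        # Failure rate is per tool call, not per turn
        "tool_failure_rate": sum(t.tool_failures for t in turns) / calls if calls else 0.0,
        "avg_tool_latency_ms": sum(latencies) / len(latencies) if latencies else 0.0,
        "fallback_activations": sum(t.fallback_used for t in turns),
    }
```

Computing rates per call (tool failures) versus per turn (barge-ins) matters: a turn with many tool calls would otherwise hide or exaggerate failures.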